Advantages:

- Scales penetration-testing capacity by pairing human testers with an AI assistant

- Learns from tester feedback on each test and improves over time

- Designed to fit real-world testing workflows, using open-source tools and prompts

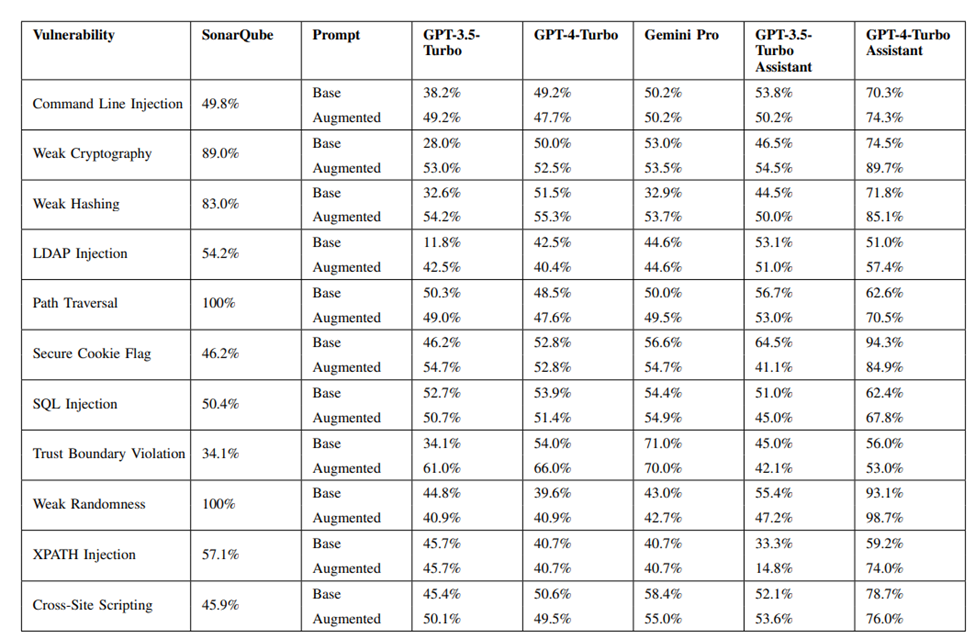

- Outperforms traditional tools like SonarQube with prompt customization

Summary:

Penetration testing is critical for finding security flaws in software. But there’s a major gap: there aren’t enough skilled testers to meet the growing need. Traditional tools like SonarQube are helpful but often give too many false alarms, which wastes time. Human testers end up ignoring alerts or missing real issues. What’s needed is a system that can understand context, adapt to new code, and learn from its mistakes just like a real teammate.

This technology introduces an AI co-pilot powered by large language models (LLMs), designed to work side-by-side with human testers. It starts with a strong base model and then improves by learning from tester feedback. This is done through prompt engineering and active learning, which lets the AI agent adapt to new code, tools, and tester needs. In tests using 2,740 real vulnerability examples, the co-pilot matched or beat SonarQube’s performance especially when using OpenAI’s GPT-4 with the Assistant API. This AI assistant is perfect for companies, defense teams, and software vendors looking to automate and enhance their cybersecurity workflows.

This table shows how the AI co-pilot, powered by GPT-4 Assistant and enhanced with prompts, outperforms traditional tools like SonarQube across several types of software vulnerabilities.

Desired Partnerships:

- License

- Sponsored Research

- Co-Development